|

My name is Hongchao Fang (方红超), a first-year PhD student at Penn State University. I am glad to be supervised by Prof. Wenpeng Yin. Before PSU, I received my Master's degree in Computer Science from Northeastern University and my B.Eng. degree from Central University of Finance and Economics, China. I have been fortunate to work under the supervision of Dr. Min and Prof. Hannaneh at UW, Weiyan Shi at Stanford University, and Prof. Xie at UCSD. I am actively looking for research internships for summer 2025. Feel free to email me if you have openings. Scholar / Email / LinkedIn / Github / Curriculum Vitae |

|

|

|

My research focuses on customized Large Language Models along two directions: LLMs with domain knowledge and LLMs with specific personalities. I'm currently interested in applying self-supervised learning to generation tasks to explore large language models' ability to learn personality from domain dialogues. |

|

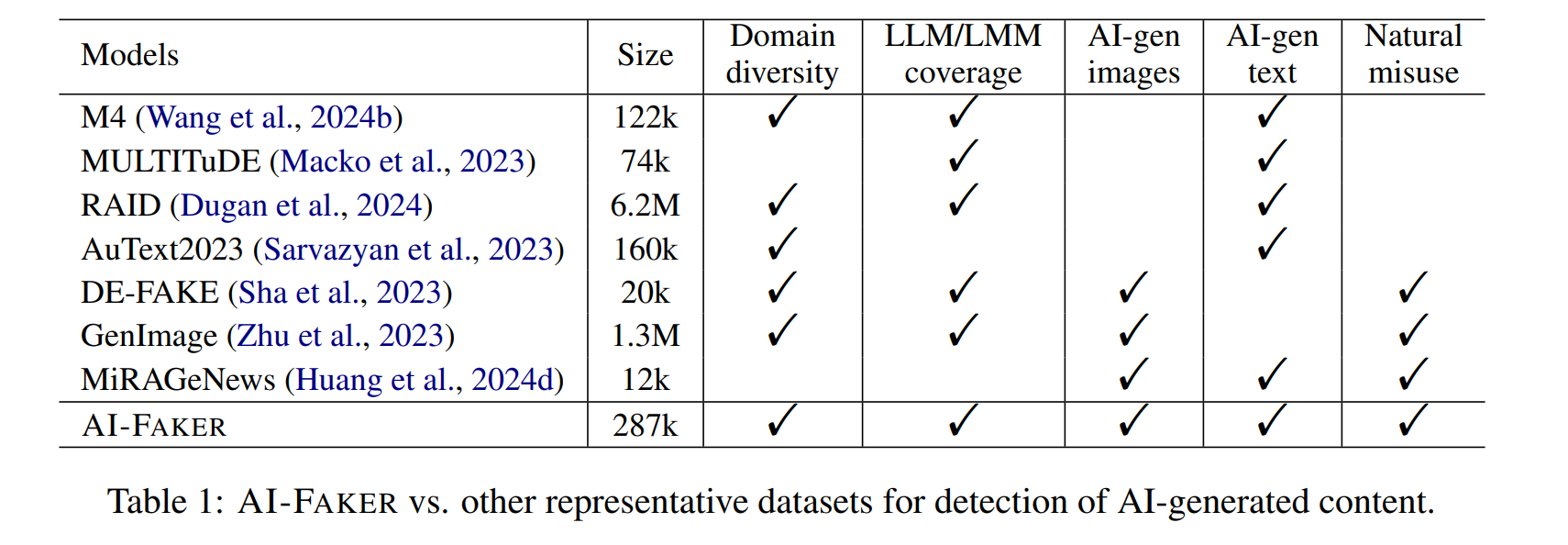

Hongchao Fang, Yixin Liu, Jiangshu Du, Can Qin, Ran Xu, Feng Liu, Lichao Sun, Dongwon Lee, Lifu Huang, Wenpeng Yin. Preprint [paper] We introduce AI-FAKER, a comprehensive multimodal dataset with over 280,000 samples spanning multiple LLMs and LMMs, covering both general and malicious use cases for AI-generated images and texts. |

|

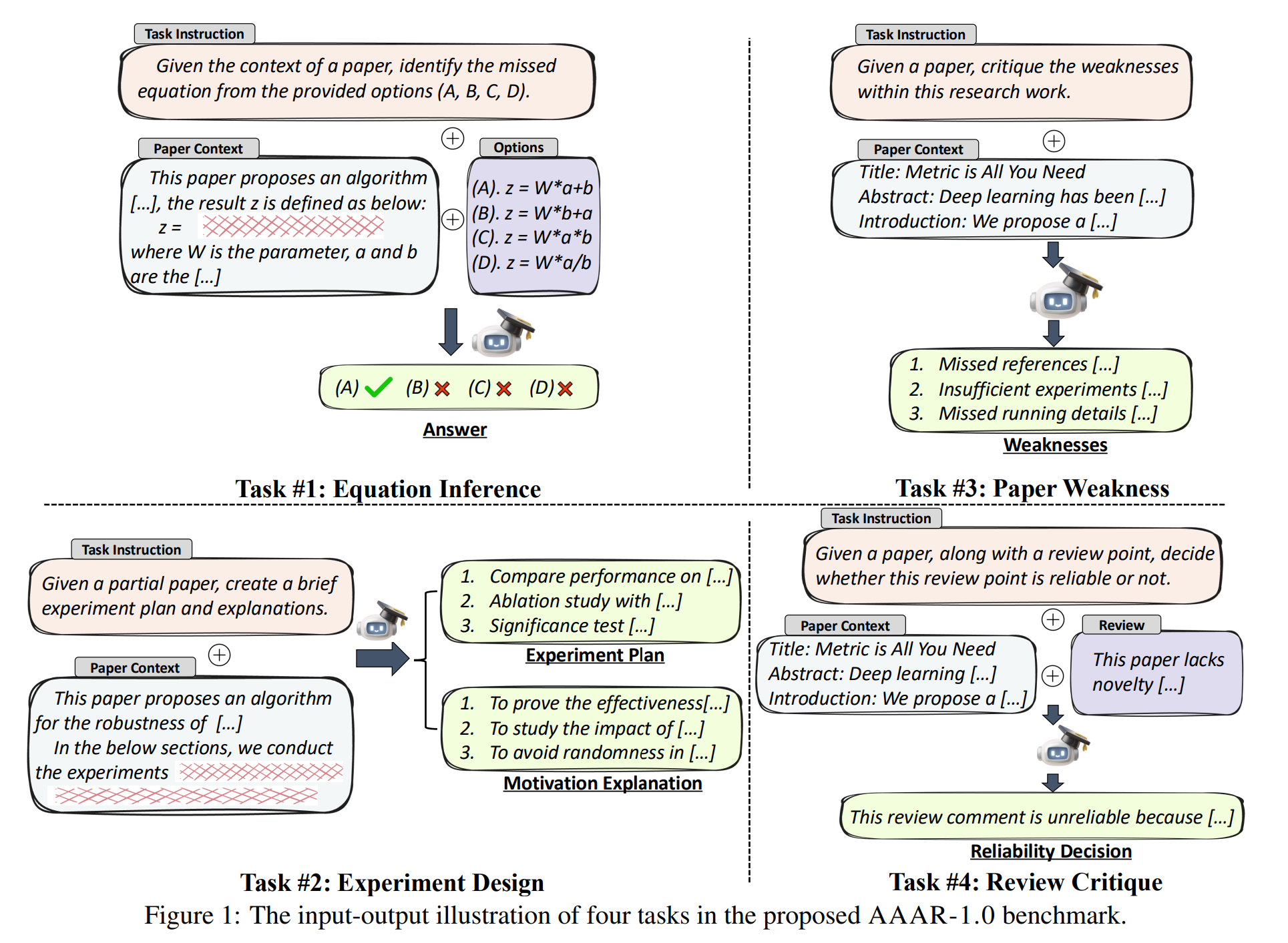

Renze Lou, Hanzi Xu, Sijia Wang, Jiangshu Du, Ryo Kamoi, Xiaoxin Lu, Jian Xie, Yuxuan Sun, Yusen Zhang, Jihyun Janice Ahn, Hongchao Fang, Zhuoyang Zou, Wenchao Ma, Xi Li, Kai Zhang, Congying Xia, Lifu Huang, Wenpeng Yin. AAAI [paper] In this study, we introduce AAAR-1.0, a benchmark dataset designed to evaluate LLM performance in four fundamental, expertise-intensive research tasks: (i) EquationInference, assessing the correctness of equations based on the contextual information in paper submissions; (ii) ExperimentDesign, designing experiments to validate research ideas and solutions; (iii) PaperWeakness, identifying weaknesses in paper submissions; and (iv) ReviewCritique, identifying whether each segment in human reviews is deficient or not. |

|

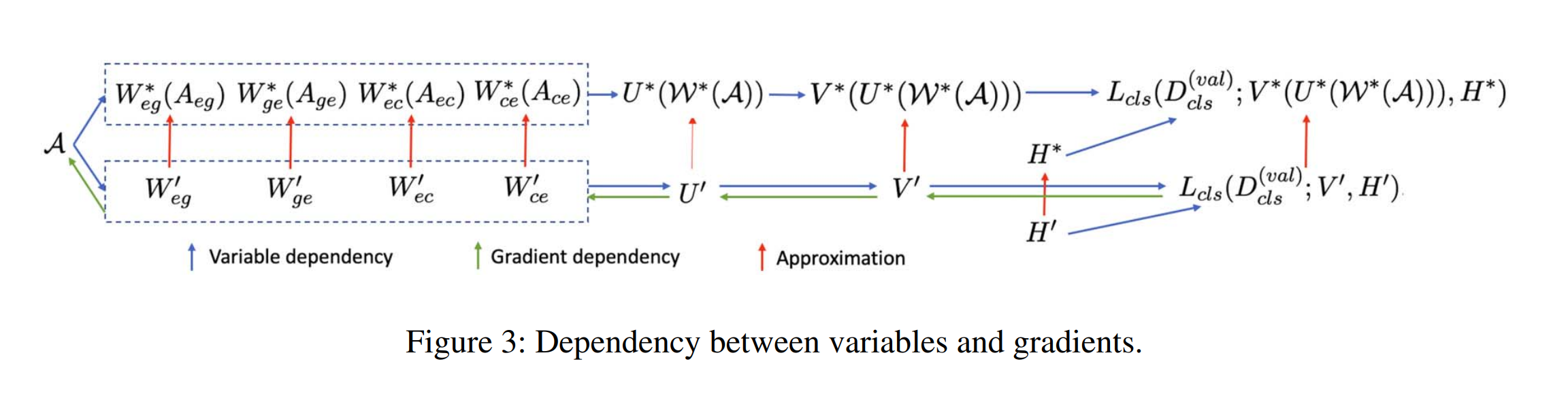

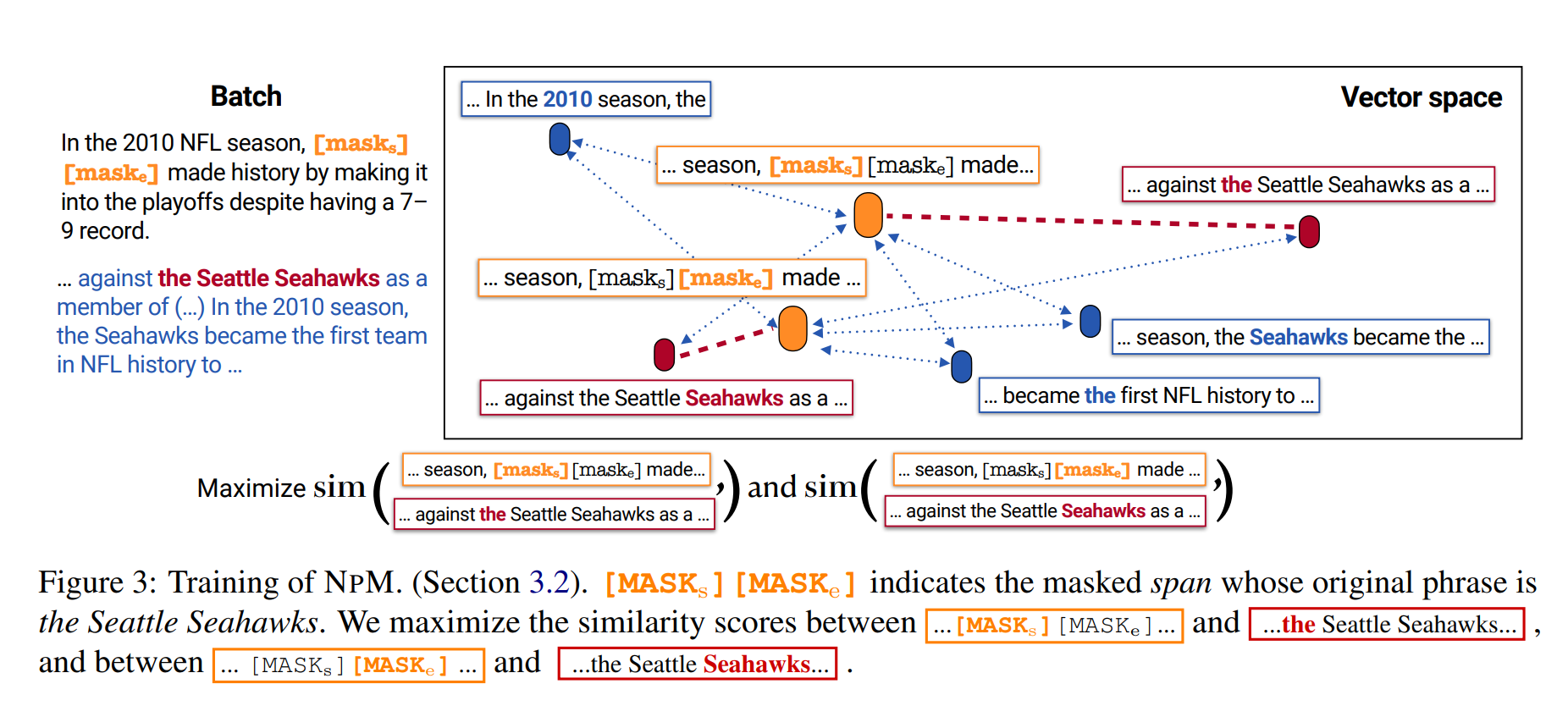

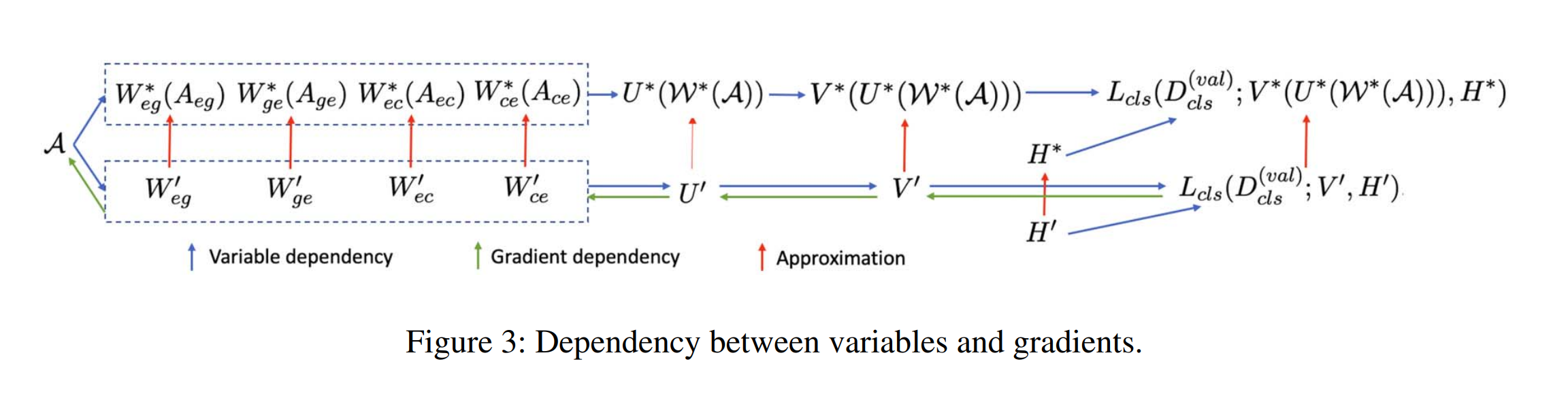

Hongchao Fang, Pengtao Xie. Transactions of the Association for Computational Linguistics 2022; 10: 1324–1340 [paper] We propose a four-level optimization framework that performs data augmentation and contrastive learning end-to-end, so that the augmented data is tailored to the contrastive learning task. |

|

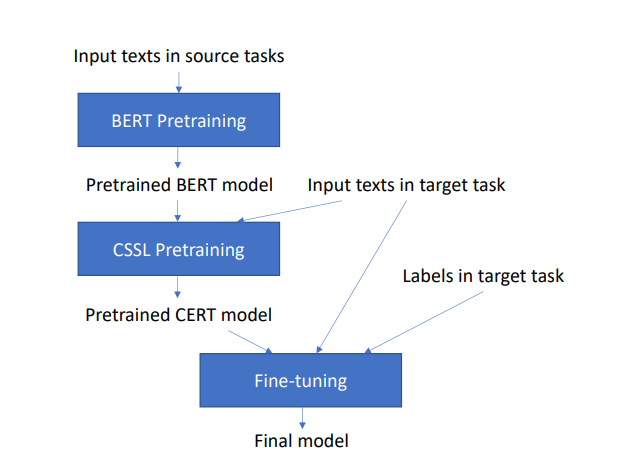

Hongchao Fang, Pengtao Xie. arXiv preprint [paper] [code] We propose CERT: Contrastive self-supervised Encoder Representations from Transformers, which pretrains language representation models using contrastive self-supervised learning at the sentence level. |

|

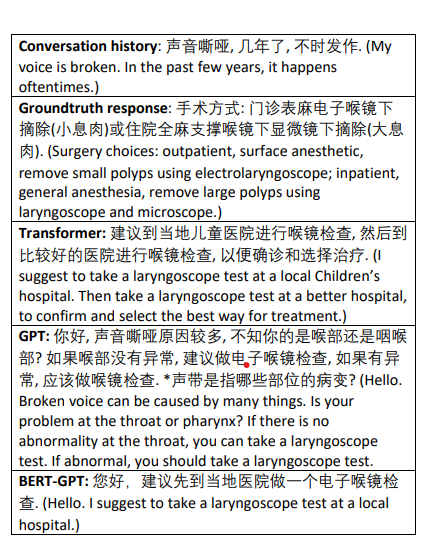

Guangtao Zeng, Wenmian Yang, Zeqian Ju, Yue Yang, Sicheng Wang, Ruisi Zhang, Meng Zhou, Jiaqi Zeng, Xiangyu Dong, Ruoyu Zhang, Hongchao Fang, Penghui Zhu, Shu Chen, and Pengtao Xie. Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP) [paper] [code] We build MedDialog, a set of large-scale medical dialogue datasets that is the largest medical dialogue dataset to date. We pre-train several dialogue generation models on the Chinese MedDialog dataset, including Transformer, GPT, and BERT-GPT, and compare their performance. We show that models trained on MedDialog are able to generate clinically correct and human-like medical dialogues. We also study the transferability of models trained on MedDialog to low-resource medical dialogue generation tasks. Fine-tuning models pre-trained on MedDialog greatly improves performance on medical dialogue generation tasks with small datasets, in both human and automatic evaluation. |

|

Intern Project at Stanford. |

|

Intern Project at UW. |

|

Intern Project at UCSD. |

|

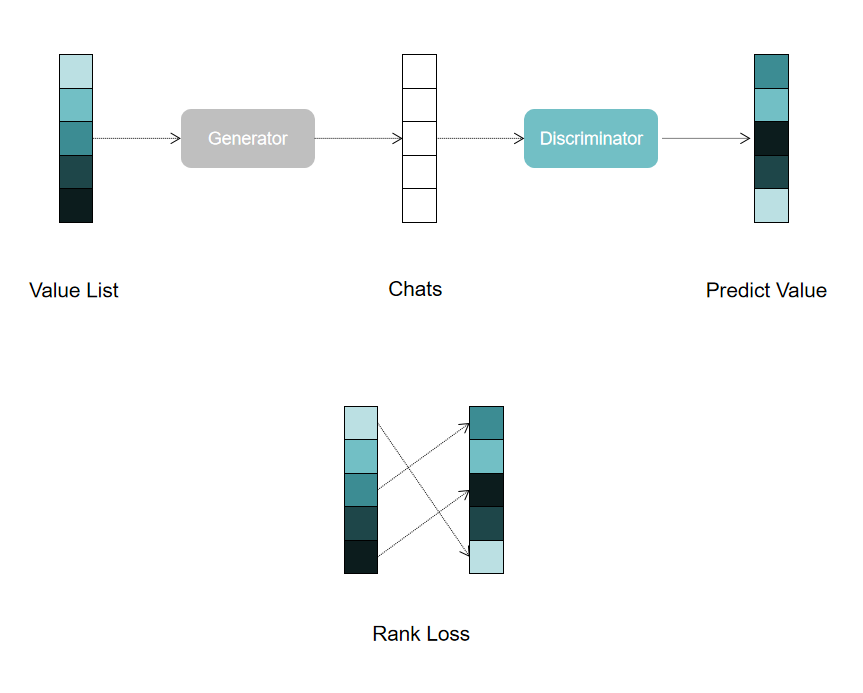

Intern Project at ICT, Chinese Academy of Sciences. |

|

Software Engineer Internship at Amazon. |

|

|

|

Website design from Jon Barron |